LAX Bot

Project Description

As a dedicated mechanical engineering student at Northwestern University with a specialization in Robotics, I spearheaded the Lacrosse Goalie Robot project for the Northwestern Robotics Club. This cutting-edge project aimed to develop a robot capable of tracking and intercepting a lacrosse ball in real time, showcasing my expertise in high-speed motion tracking and real-time analytics.

In this project, I led the design and implementation of a novel 3D ball-tracking system. Faced with the challenge of tracking a lacrosse ball moving at approximately 70 mph, I replaced the traditional neural network approach with a more efficient color-mask approach combined with contour finding, cutting processing time from seconds to milliseconds. A key contribution was developing an algorithm to precisely calculate the ball's Z-coordinate, a critical factor in accurate 3D tracking. Originally, I used a monovision system and estimated the Z-coordinate from the radius of the detected ball. I have since begun transitioning the team to a stereovision system that uses epipolar geometry to make more accurate Z predictions. This work demonstrated my technical proficiency in Python, curve fitting, and regression analysis, and highlighted my ability to quickly adapt to new technologies and troubleshoot complex problems.

My role in this project went beyond technical skills, encompassing team leadership and project management. I effectively coordinated team efforts, set clear goals, and ensured timely progress, demonstrating my capacity to lead challenging projects in fast-paced environments.

This experience underlines my readiness to contribute meaningfully to innovative projects in the robotics and AI fields. I bring a combination of technical expertise, problem-solving skills, and leadership experience, making me a valuable asset to any forward-thinking organization in the engineering sector.

Ball Detection

A color mask, used together with an orange LAX ball, was implemented to detect the position of the ball in the frame. To accomplish this, my team and I wrote a script that automatically finds the proper HSV (hue, saturation, value) ranges to use in the code. See the video below for the implementation.
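The team's range-finding script is not shown here. As a minimal sketch of the idea, assuming you have already sampled RGB pixels from frames where the ball is known to be, the bounds can be taken as the min/max of each HSV channel plus a safety margin. The function name `hsv_range_from_samples` and the margin values are hypothetical; the output is scaled to OpenCV's 8-bit HSV convention (H in 0 to 179, S and V in 0 to 255).

```python
import colorsys
import math

# OpenCV stores 8-bit HSV as H in [0, 179], S and V in [0, 255].
HSV_LIMITS = (179, 255, 255)


def hsv_range_from_samples(rgb_pixels, margin=(10, 40, 40)):
    """Return (lower, upper) HSV bounds that cover every sampled ball pixel.

    rgb_pixels: iterable of (r, g, b) tuples with 0-255 channels.
    margin: hypothetical per-channel padding to tolerate lighting changes.
    """
    hsv = []
    for r, g, b in rgb_pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        # colorsys returns floats in [0, 1]; rescale to OpenCV's convention
        hsv.append((h * 180, s * 255, v * 255))
    lower = tuple(
        max(0, math.floor(min(p[i] for p in hsv) - margin[i])) for i in range(3)
    )
    upper = tuple(
        min(HSV_LIMITS[i], math.ceil(max(p[i] for p in hsv) + margin[i]))
        for i in range(3)
    )
    # Hue wraps around at red; an orange ball stays clear of the wrap,
    # but a red ball would need two separate hue ranges.
    return lower, upper
```

The resulting bounds are in the form OpenCV's `cv2.inRange` expects for a frame converted with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`. Note that OpenCV frames are BGR, so sampled pixels would need their channels reordered before calling this function.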

The binary mask was then fed into a contour detection algorithm to find the position and radius of the ball.
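A common OpenCV route for this step is `cv2.findContours` followed by `cv2.minEnclosingCircle`. To keep the sketch dependency-light, the version below swaps in connected-component labeling (`scipy.ndimage.label`) and reports the radius of a circle with the same area as the largest blob. The helper names `in_range` and `locate_ball` are hypothetical, and picking the largest blob is an assumed rule for rejecting small false positives.

```python
import numpy as np
from scipy import ndimage


def in_range(hsv_image, lower, upper):
    """NumPy stand-in for cv2.inRange: 255 where all channels are within bounds."""
    lower = np.asarray(lower)
    upper = np.asarray(upper)
    inside = np.all((hsv_image >= lower) & (hsv_image <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


def locate_ball(mask):
    """Return (cx, cy, radius) in pixels for the largest blob, or None."""
    labels, count = ndimage.label(mask > 0)
    if count == 0:
        return None
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # label 0 is the background
    biggest = int(np.argmax(sizes))
    ys, xs = np.nonzero(labels == biggest)
    cx, cy = xs.mean(), ys.mean()
    # Radius of a circle whose area equals the blob's pixel count
    radius = np.sqrt(sizes[biggest] / np.pi)
    return float(cx), float(cy), float(radius)
```

The equal-area radius is less sensitive to a single stray edge pixel than an enclosing circle, but it underestimates the radius when the ball is partly occluded or motion-blurred.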

Monovision System Transform 2D -> 3D

The pixel data output by the ball detection system must be transformed into real-world coordinates. My approach was to gather as much data as possible and visually inspect it for notable trends. See the graphs below.

Reviewing the data revealed several trends. The left graph, comparing x-pixel and x-real, showed a correlation between the two variables, but the variance increased toward the extremes of the x range, indicating that other factors were in play. The middle graph gave a clear visualization of the relationship among all three dimensions, which led me to conclude that the other dimensions would need to be taken into account. The data in the right graph appeared to follow an arctan-shaped curve.
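The textbook pinhole-camera model offers one way to see why x-pixel alone is not enough. It is shown here for comparison only; it is not the empirical fit the project used. Under that model, real X equals (u - cx) * Z / f, so at the image center X is 0 at any depth, while farther from the center the same pixel column maps to a wider range of real X as Z varies. That matches the variance growing toward the extremes of the x range. The same model relates apparent radius to depth. The focal length, principal point, and a 1.25 in. ball radius are assumed values, and the function names are hypothetical.

```python
def depth_from_radius(radius_px, ball_radius, focal_px):
    """Pinhole estimate of depth: apparent radius shrinks as 1 / Z."""
    return focal_px * ball_radius / radius_px


def pixel_to_camera(u, v, z, focal_px, cx, cy):
    """Back-project pixel (u, v) at depth z into camera-frame (X, Y, Z).

    Image v grows downward, so positive Y here points down.
    """
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


# Example with assumed intrinsics: f = 800 px, principal point (640, 360)
z = depth_from_radius(20, 1.25, 800)            # 50.0 (inches)
print(pixel_to_camera(720, 240, z, 800, 640, 360))  # (5.0, -7.5, 50.0)
```

Lens distortion and noise in the detected radius both push real data away from this ideal model, which is one reason an empirically fitted curve can be the more practical choice.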

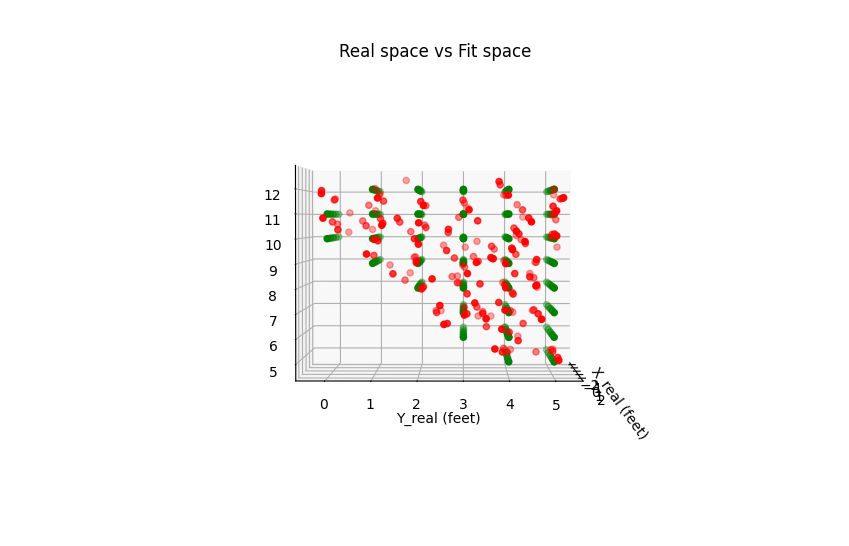

To create these transforms, I wrote a Python script that uses SciPy's optimization tools to find the coefficients that best fit the data. See the results below.
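The exact model and script are in the project repository. As a hedged sketch of the approach, `scipy.optimize.curve_fit` can fit an arctan-shaped curve from apparent radius to real Z. The four-parameter form `a * arctan(b * (r - c)) + d`, the starting guess `p0`, and the synthetic data are all assumptions for illustration. The RMSE of the residuals is one simple way to quantify the Z error.

```python
import numpy as np
from scipy.optimize import curve_fit


def arctan_model(r, a, b, c, d):
    """Hypothetical arctan-shaped map from apparent radius (px) to real Z."""
    return a * np.arctan(b * (r - c)) + d


def fit_z_transform(radius_px, z_real, p0=(-30.0, 0.1, 20.0, 50.0)):
    """Fit the model and return (params, rmse) for the residuals."""
    params, _cov = curve_fit(arctan_model, radius_px, z_real, p0=p0, maxfev=10000)
    residuals = z_real - arctan_model(radius_px, *params)
    rmse = float(np.sqrt(np.mean(residuals ** 2)))
    return params, rmse


if __name__ == "__main__":
    # Synthetic stand-in for measured (radius, Z) pairs
    rng = np.random.default_rng(0)
    r = np.linspace(5, 60, 60)
    z = arctan_model(r, -40.0, 0.15, 25.0, 60.0) + rng.normal(0, 0.2, r.size)
    params, rmse = fit_z_transform(r, z)
    print("a, b, c, d =", np.round(params, 3), "RMSE =", round(rmse, 3))
```

Because arctan is odd, (a, b) and (-a, -b) give the same curve, so a starting guess with the expected sign keeps the fitted coefficients interpretable.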

The error in the Z-coordinate transform is large, which makes trajectory planning and in-flight detection of the ball difficult.

Monovision Trajectory Planning and Flight Detection

With the ball's 3D coordinates available, the next step is to predict where the ball will be when it crosses the plane of the goal. To accomplish this, I computed the ball's velocities and accelerations from its successive positions. When the ball's y acceleration matched gravity (~32 ft/s^2) within some tolerance, the ball was considered to be in the air. If this holds over several consecutive data points, the ball's future trajectory can be predicted by extending the parabola: the x and z velocities stay constant while the y velocity changes as gravity acts on the ball. See the full monovision code in the link below.
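A minimal sketch of this flight-detection and extrapolation logic, assuming positions in feet with +y pointing up, the track stored as an (N, 3) NumPy array of x, y, z with matching timestamps, and a goal plane at a constant z (`z_goal`). The function names and the `n_points` / `tol` defaults are hypothetical. Velocities and accelerations come from `np.gradient` finite differences; as described above, x and z velocities are held constant (air drag is ignored) while y follows gravity.

```python
import numpy as np

G = 32.2  # ft/s^2; assumes +y is up and positions are in feet


def derivatives(times, positions):
    """Finite-difference velocity and acceleration for an (N, 3) track."""
    vel = np.gradient(positions, times, axis=0, edge_order=2)
    acc = np.gradient(vel, times, axis=0, edge_order=2)
    return vel, acc


def in_flight(acc, n_points=5, tol=4.0):
    """True if the last n_points y accelerations are all within tol of -G."""
    if len(acc) < n_points:
        return False
    return bool(np.all(np.abs(acc[-n_points:, 1] + G) < tol))


def predict_goal_crossing(position, velocity, z_goal):
    """Extend the parabola until z reaches z_goal; return (x, y) or None."""
    x0, y0, z0 = position
    vx, vy, vz = velocity
    if vz == 0:
        return None
    t = (z_goal - z0) / vz
    if t < 0:  # ball is moving away from the goal plane
        return None
    x = x0 + vx * t  # constant horizontal velocity
    y = y0 + vy * t - 0.5 * G * t ** 2  # gravity acts on y only
    return x, y
```

For example, a synthetic 60 Hz track with y(t) = 3 + 10t - 16.1t^2 and z(t) = 40 - 50t passes `in_flight`, and extrapolating from its last sample to `z_goal = 0` reproduces the analytic crossing point. With noisy detections, second differences amplify noise sharply, which is consistent with the poor results described below.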

Because the data quality was so poor, it never made much sense to keep pursuing the flight detection and trajectory planning steps.

Stereovision Ball Detection

The structure of the code base has improved considerably: it now uses a much cleaner class-based approach. The stereovision system uses the same color-masking and ball-detection algorithms as the monovision system; see the current state of the project below.
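The project's stereo classes are not reproduced here. As a hypothetical illustration of a class-based design, the dataclass below holds the parameters of a stereo pair and triangulates the ball from its detected pixel centers in the left and right images. It assumes the images are already rectified (matching points share a row), so depth follows the standard relation Z = f * B / d with disparity d = u_left - u_right. The class name, fields, and numbers are assumptions.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical parameters for an already-rectified stereo pair."""

    focal_px: float  # shared focal length after rectification, in pixels
    baseline: float  # distance between camera centers; sets output units
    cx: float        # principal point x, in pixels
    cy: float        # principal point y, in pixels

    def triangulate(self, left_uv, right_uv):
        """Return (X, Y, Z) in the left camera frame, or None if d <= 0."""
        (ul, vl), (ur, _vr) = left_uv, right_uv
        disparity = ul - ur  # rectified: same row, shifted column
        if disparity <= 0:
            return None
        z = self.focal_px * self.baseline / disparity
        x = (ul - self.cx) * z / self.focal_px
        y = (vl - self.cy) * z / self.focal_px
        return x, y, z


rig = StereoRig(focal_px=800.0, baseline=0.5, cx=640.0, cy=360.0)
print(rig.triangulate((700.0, 400.0), (680.0, 400.0)))  # (1.5, 1.0, 20.0)
```

Depth error grows roughly with Z^2 / (f * B) per pixel of disparity error, so a wider baseline helps at the distances a goalie robot cares about.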

Next Up:

Gather data and make an updated fit from pixel space to real space with the stereovision system

Implement stereo image rectification using epipolar lines

Add Kalman filtering to weigh each new data point against the predicted trajectory

Add a user interface to improve usability
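One possible shape for the Kalman filtering item in the list above: a constant-velocity filter per axis, with gravity supplied as a known acceleration on y. This is a sketch under stated assumptions; the class name `AxisKalman` and the noise variances are hypothetical placeholders that would need tuning from real detections. The Kalman gain `K` is what adapts: when the filter's own prediction is uncertain, new measurements pull the estimate harder, and when it is confident, outliers are damped.

```python
import numpy as np


class AxisKalman:
    """Constant-velocity Kalman filter for one axis; state = [pos, vel]."""

    def __init__(self, dt, accel=0.0, process_var=1.0, meas_var=0.25):
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        # Known acceleration (e.g. -32.2 ft/s^2 on y) enters as a control term
        self.u = np.array([0.5 * accel * dt ** 2, accel * dt])
        # White-noise-acceleration process model
        self.Q = process_var * np.array(
            [[dt ** 4 / 4, dt ** 3 / 2], [dt ** 3 / 2, dt ** 2]]
        )
        self.H = np.array([[1.0, 0.0]])  # only position is measured
        self.R = np.array([[meas_var]])
        self.x = np.zeros(2)
        self.P = np.eye(2) * 1e3  # large initial uncertainty

    def step(self, z):
        """Predict one dt ahead, then correct with measured position z."""
        self.x = self.F @ self.x + self.u
        self.P = self.F @ self.P @ self.F.T + self.Q
        innovation = z - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return self.x.copy()
```

A tracker built this way would run three instances (x, y, and z), passing `accel=-32.2` only to the y filter, and feed each new detection to `step`.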

Last updated 12/14/2023

Go see the latest updates on the project at our GitHub:

https://github.com/NURobotics/NURC-LAX-24